Monitoring Sitemap of a Website Using a Crawler

Sitemap monitoring is currently in alpha and available in the Professional plan and above.

Table of Contents

- Introduction

- Sitemap Monitoring with Distill: How It Works

- Create and Monitor Sitemap for a Website

- Exclude Links for Crawling

- Frequency of Crawling

- Page Macro: Execute Steps Before a Page is Crawled

- URL Rewrite

- View and Manage Crawlers

- Import and Monitor Crawled URLs

- Export Crawled URLs

- How Crawler Data is Cleaned Up

Introduction

A sitemap is an essential tool that helps search engines understand the structure of a website and crawl its content. It usually contains all URLs of a website, including those that may not be easily discoverable by search engines or visitors.

There are different formats of sitemaps, but the most common and widely supported format is the XML sitemap. While XML sitemaps are widely used, they may not be updated regularly or generated automatically. So, if you want to receive updates about new links added to a website, monitoring its sitemap file may not reflect the current state, and you may be missing out on new or updated content. To overcome this limitation, you can use Distill’s crawler to index all URLs on a website. A crawler navigates through a website, discovering and indexing pages just like a search engine would. This ensures that all URLs are extracted from the website, even the hidden or dynamically generated pages.

Sitemap Monitoring with Distill: How It Works

You can keep track of URLs belonging to a particular website using sitemap monitoring. Distill achieves this by first creating a list of URLs through the use of crawlers. The crawlers start at the source URL and search for all links within it. These links are then added to a list, and the crawlers proceed to go through each link one by one, crawling them to search for any additional links on the new page. The crawlers continue this process, crawling all the links they find until there are no more left to crawl.

However, the crawlers skip some links. They will not crawl links outside of the website’s domain, links that are not a subpath of the Start URL, or links that match the regular expression set in the Exclude option when the monitor was added. By following these rules, Distill creates a comprehensive and accurate list of the URLs belonging to a website, which can then be monitored for changes.
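The crawl loop and its exclusion rules can be sketched in a few lines of Python. This is only an illustration of the behaviour described above, not Distill’s implementation: the `PAGES` dictionary stands in for a real website, and the names `in_scope` and `crawl` are hypothetical.

```python
import re
from collections import deque
from urllib.parse import urlsplit

# Hypothetical in-memory "website": each URL maps to the links found on that page.
PAGES = {
    "https://example.com/docs/": [
        "https://example.com/docs/a",
        "https://example.com/docs/logo.png",   # matches the exclude pattern
        "https://example.com/pricing",         # not under the start path
        "https://forums.example.com/docs/x",   # different subdomain
    ],
    "https://example.com/docs/a": [
        "https://example.com/docs/b",
        "https://example.com/docs/",           # already seen, not queued again
    ],
    "https://example.com/docs/b": [],
}

def in_scope(url, start, exclude):
    """Apply the three rules: same host, same subpath, not excluded."""
    s, u = urlsplit(start), urlsplit(url)
    if u.netloc != s.netloc:
        return False
    if not u.path.startswith(s.path):
        return False
    return exclude.search(url) is None

def crawl(start, exclude_pattern):
    """Breadth-first crawl that stops when no uncrawled links are left."""
    exclude = re.compile(exclude_pattern)
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in PAGES.get(page, []):
            if link not in seen and in_scope(link, start, exclude):
                seen.add(link)
                queue.append(link)
    return sorted(seen)

sitemap = crawl("https://example.com/docs/", r"\.(png|jpe?g|gif)$")
print(sitemap)
```

Only the three pages under `/docs/` end up in the list; the image, the `/pricing` page, and the forum link are filtered out by the scope rules.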

Create and Monitor Sitemap for a Website

If you need to monitor for any updates on links for a website, you only need to create a sitemap monitor. However, if you also need to monitor the contents of those URLs, follow these two steps:

- Crawling: Finds all the links present on a website and creates a list of crawled URLs. You can set up the frequency and exclusions for the crawlers at this stage. This creates the sitemap for the monitor.

- Monitoring: Use the Import Monitors option under Change History to select crawled links from the list and import them into your Watchlist.

Website Link Monitoring with a Crawler

Here are the steps to monitor the sitemap using crawler:

-

Open the Watchlist from the Web app at https://monitor.distill.io

-

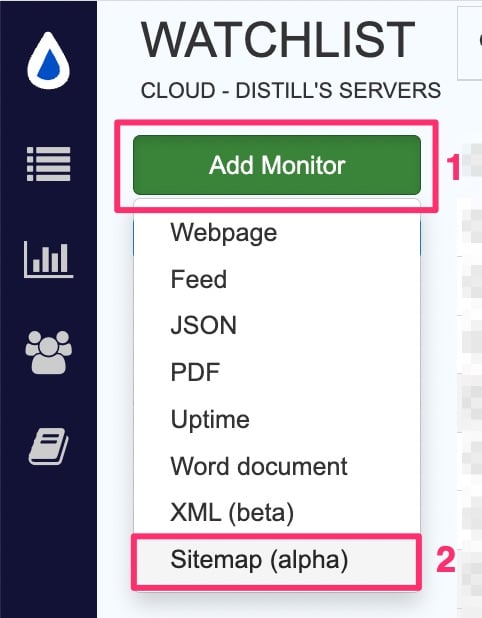

Click

Add Monitor->Sitemap.

-

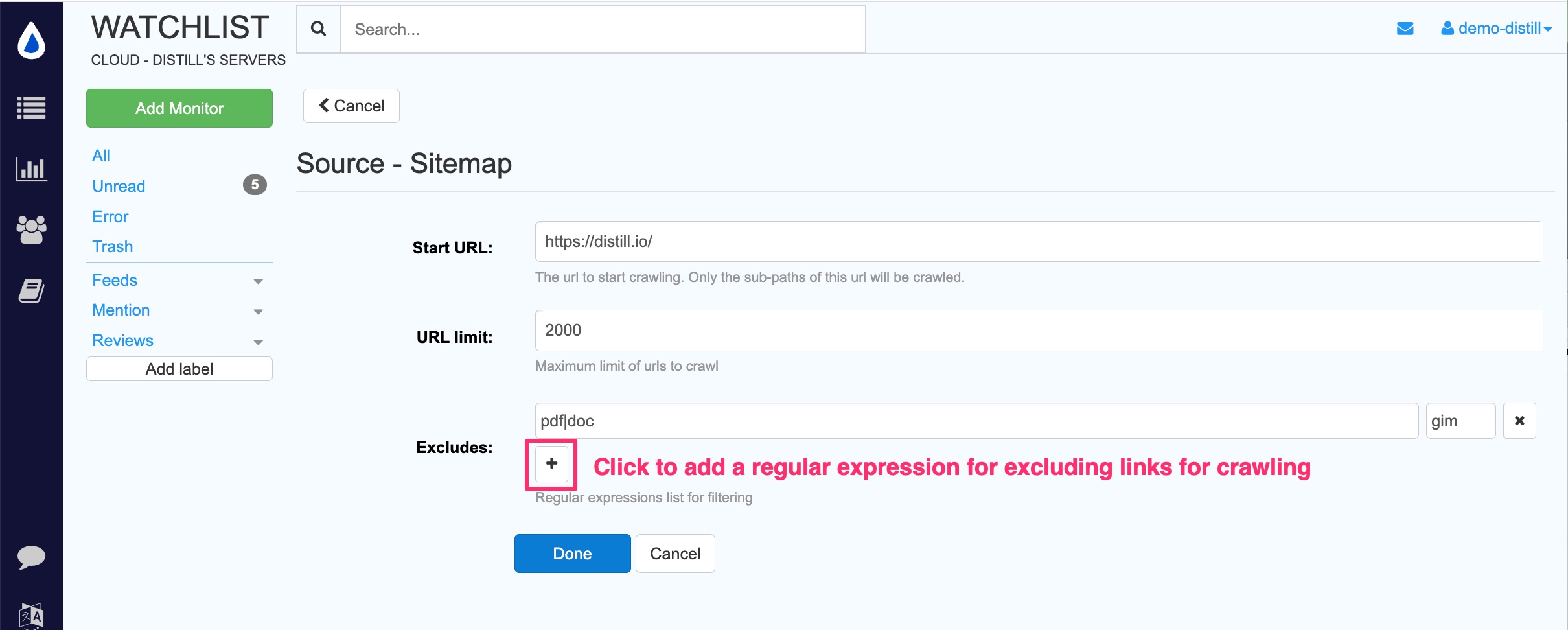

On the source page, add the Start URL. Links will be crawled from the same subdomain and the same subpath and not the full domain. So, you can add the URL from where you want to start the crawl. For example, if URL is https://distill.io, all subpaths like https://distill.io/blog, https://distill.io/help, etc will be crawled. But it will not crawl URLs like, https://forums.distill.io as it is a separate subdomain.

-

If you want to exclude any links for monitoring, you can use the regular expression filter as mentioned below for exclusion.

-

Click “Done”. It will open the Options page for the monitor’s configuration. You can configure actions and conditions at this page. Save when done.

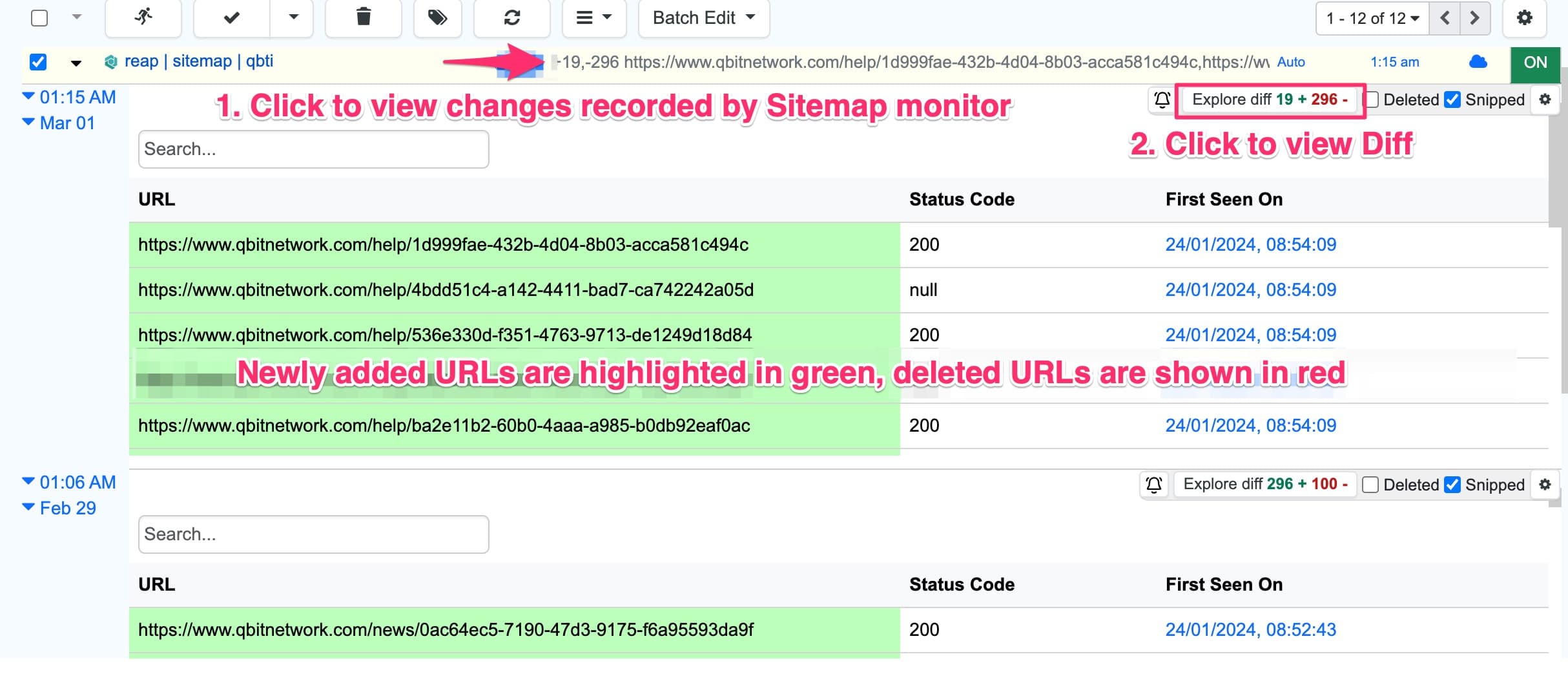

Whenever URLs are added to or removed from the sitemap, you will get a change alert. You can view the change history of a sitemap monitor by clicking on the preview.

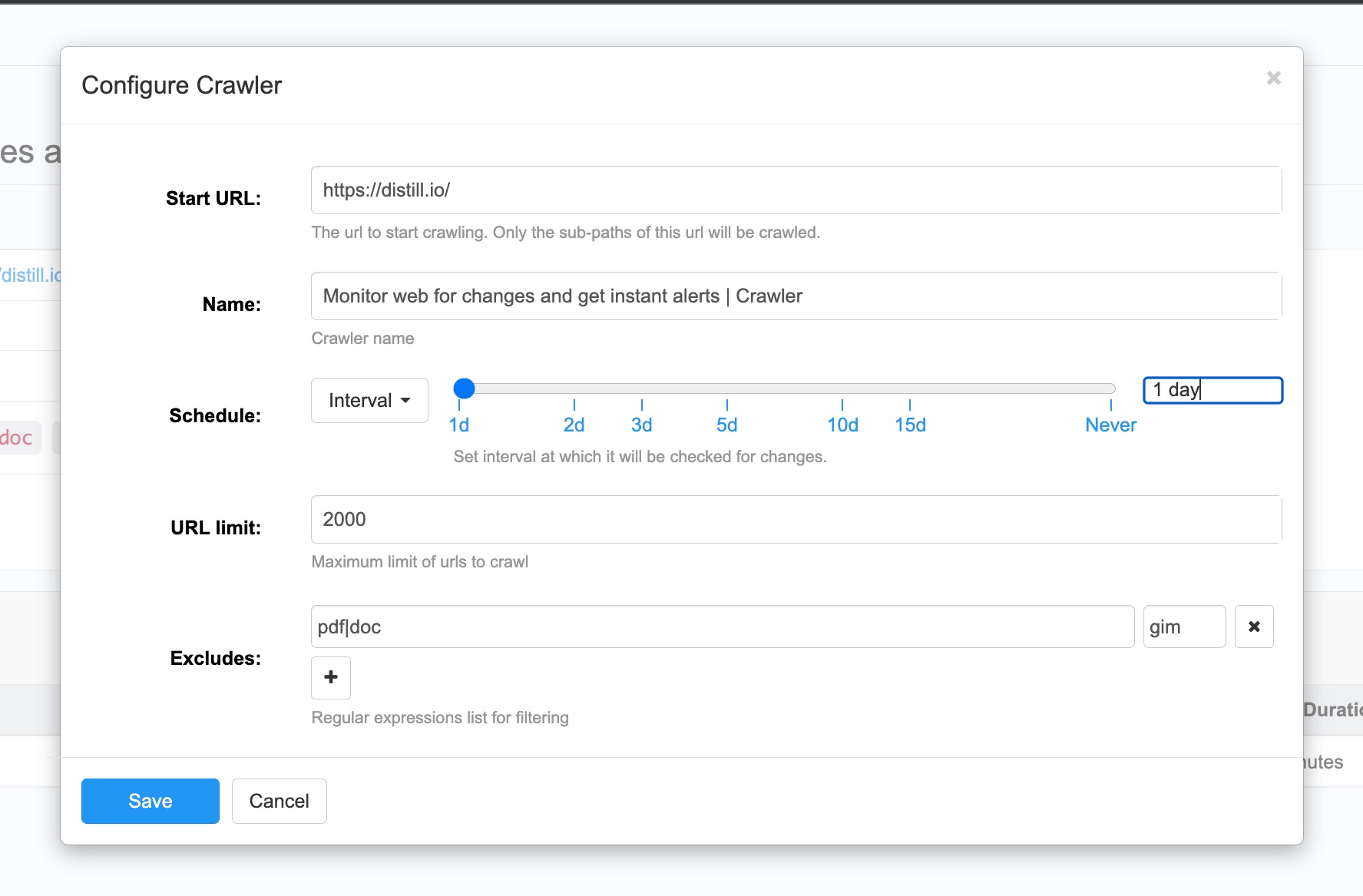

Exclude Links for Crawling

You can use the regular expression filter to exclude links from crawling. This option is available on the source page when you first set up a crawler for sitemap monitoring. Alternatively, you can modify the configuration of any existing crawler from its detail page. By default, all image links are excluded from crawling.
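It can help to try an exclusion pattern against a few sample links before saving it. The sketch below uses Python’s `re` module purely for illustration; the pattern and the links are made up, and the regular expression flavour accepted by the Exclude option may differ in details from Python’s.

```python
import re

# Hypothetical exclusion pattern: skip tag archives and paginated listings.
EXCLUDE = re.compile(r"/tag/|[?&]page=\d+")

links = [
    "https://example.com/blog/post-1",
    "https://example.com/blog/tag/news",
    "https://example.com/blog?page=2",
]

# Keep only the links that do not match the exclusion pattern.
kept = [link for link in links if not EXCLUDE.search(link)]
print(kept)
```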

Frequency of crawling

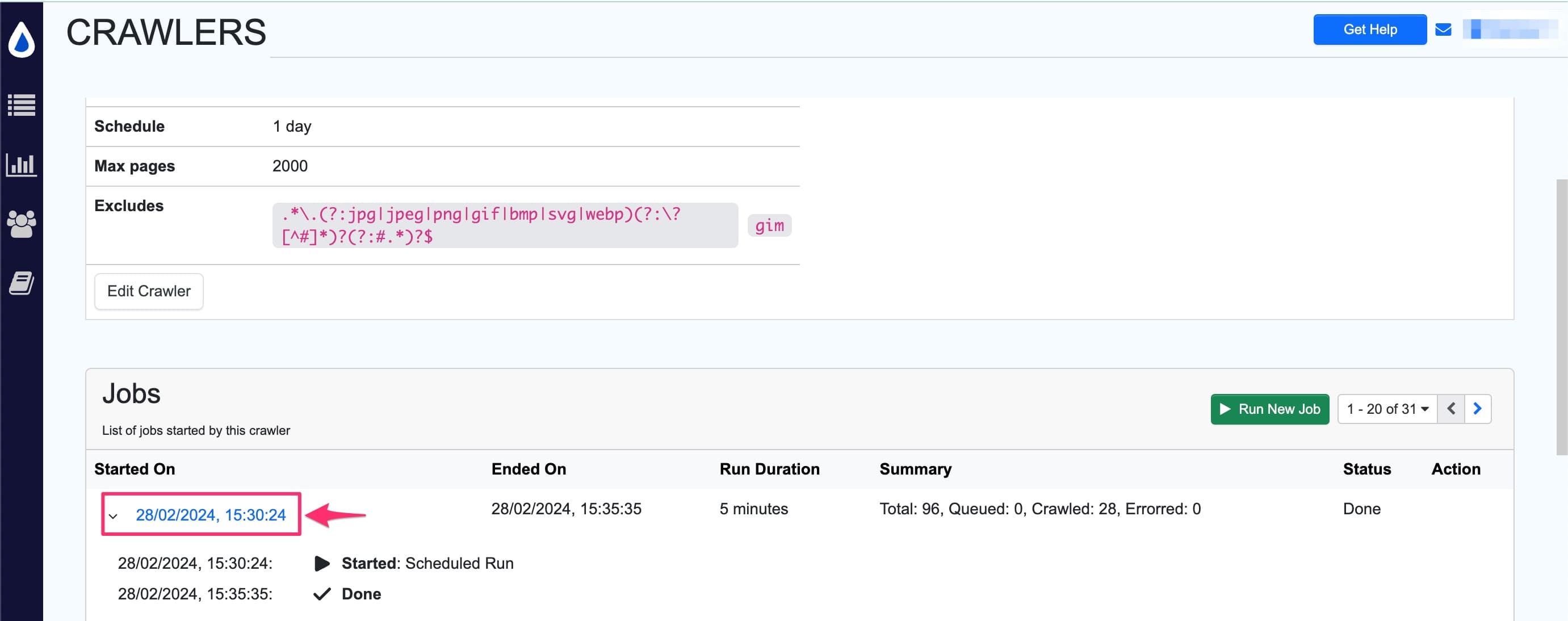

By default, the crawler frequency is set to 1 day. However, you have the option to modify the crawling frequency according to your requirements. You can do this by navigating to the crawler’s page as shown below:

Then you can click on the “Edit Crawler” option and change the schedule.

Page Macro: Execute Steps Before a Page is Crawled

Before a page is crawled, it is often necessary to perform validations on it. The macro runs on each page before that page is crawled.

Steps to add Page Macros:

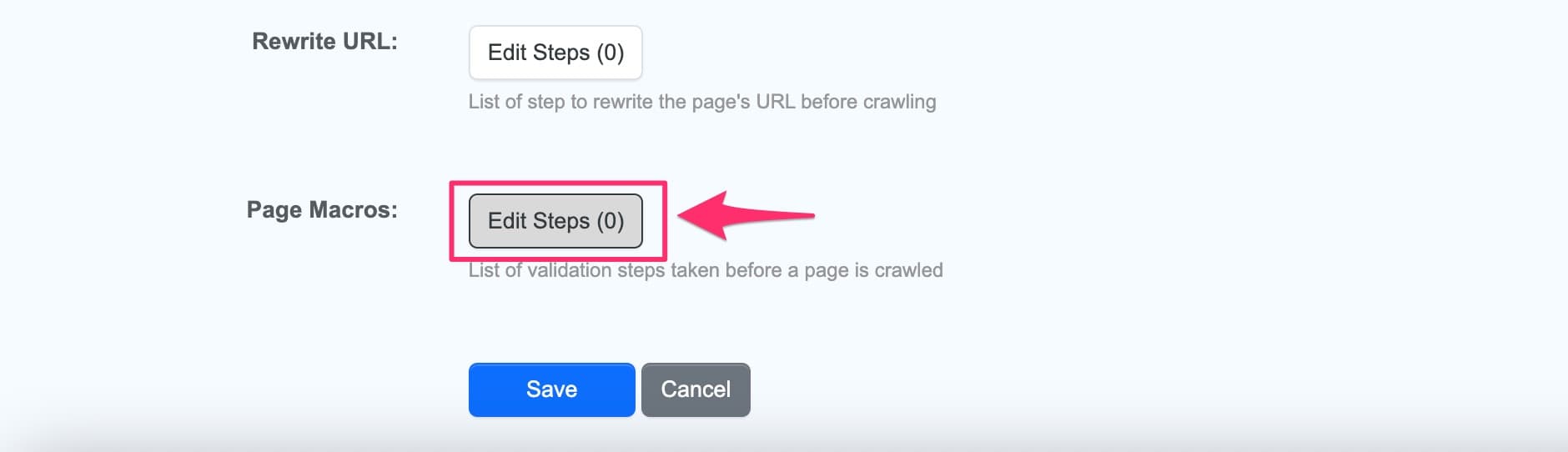

- Click the hamburger icon in the Watchlist -> Select Crawlers.

- Click on the crawler you want to add Page Macros to -> Click Edit Crawler.

- Beside Page Macros, click on Edit Steps.

- Add the steps you want to execute before a URL is crawled.

Use Cases for Page Macro

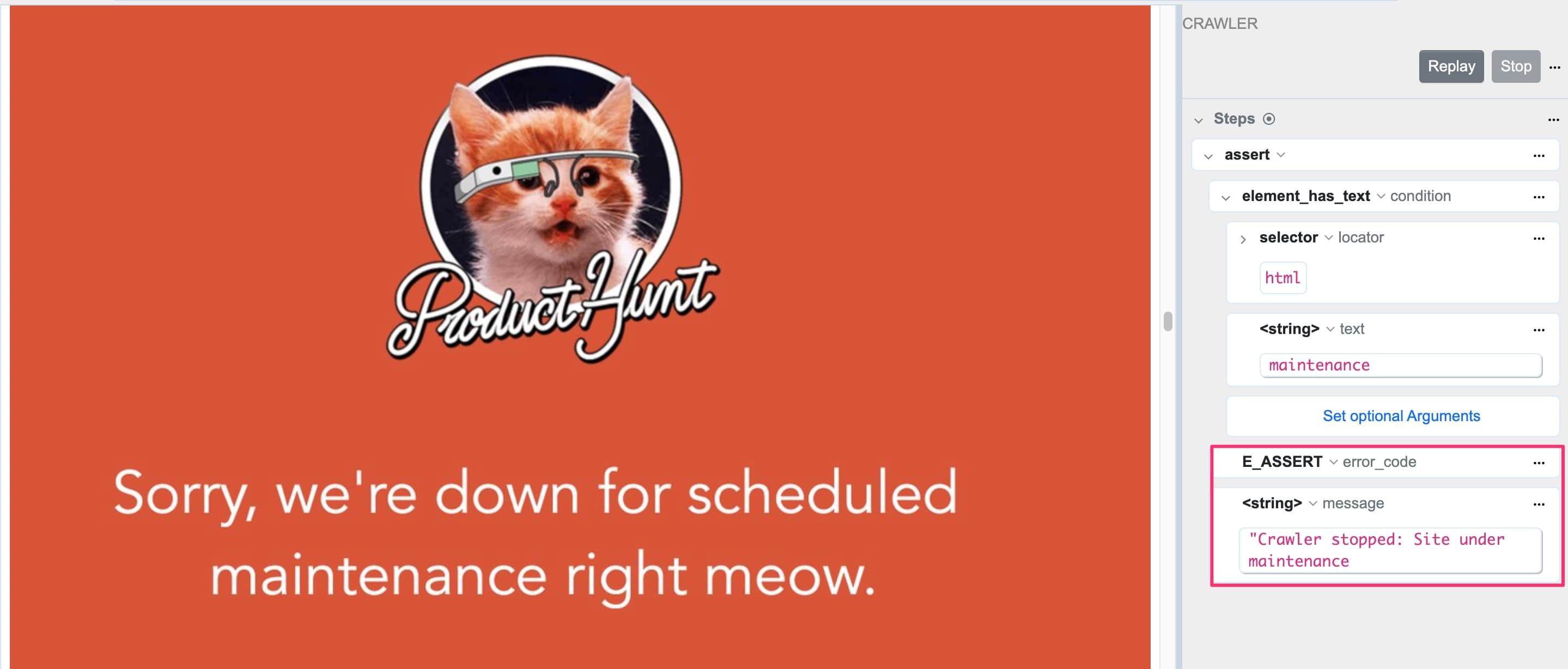

Error Out a Crawl Job When a Website is Unavailable

When a page is temporarily unavailable (for example, it returns a 503 error), the loaded page will not contain the original links. If such pages are treated as successfully crawled, the final list of crawled links will be incomplete, which triggers a false alert. To prevent this, the crawler should stop the crawl by raising an error. You can do this by adding validations in a Page Macro.

- Add an assert step and click on the caret icon to expand the options.

- Check if element_has_text and input general keywords that the site might use to indicate maintenance, such as “Maintenance”.

- Click on Set optional Arguments and type in the error message you want to display, for example: “Crawler stopped: Site under maintenance”.
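The intent of this validation, stopping the whole job when a maintenance notice appears, can be expressed as a small Python sketch. It is not the macro itself: `CrawlAborted`, `MAINTENANCE_KEYWORDS`, and `assert_site_available` are hypothetical names used only to illustrate the logic.

```python
class CrawlAborted(Exception):
    """Raised to stop the whole crawl job instead of recording a bad page."""

# Hypothetical keywords a site might show while it is unavailable.
MAINTENANCE_KEYWORDS = ("maintenance", "temporarily unavailable")

def assert_site_available(page_text):
    """Raise an error if the page text looks like a maintenance notice."""
    lowered = page_text.lower()
    for keyword in MAINTENANCE_KEYWORDS:
        if keyword in lowered:
            raise CrawlAborted("Crawler stopped: Site under maintenance")

assert_site_available("Welcome to our store")  # passes silently

try:
    assert_site_available("Down for Maintenance, back soon")
except CrawlAborted as err:
    message = str(err)
    print(message)
```

Raising an error here, rather than returning an empty link list, is what keeps an incomplete crawl from being compared against the previous one.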

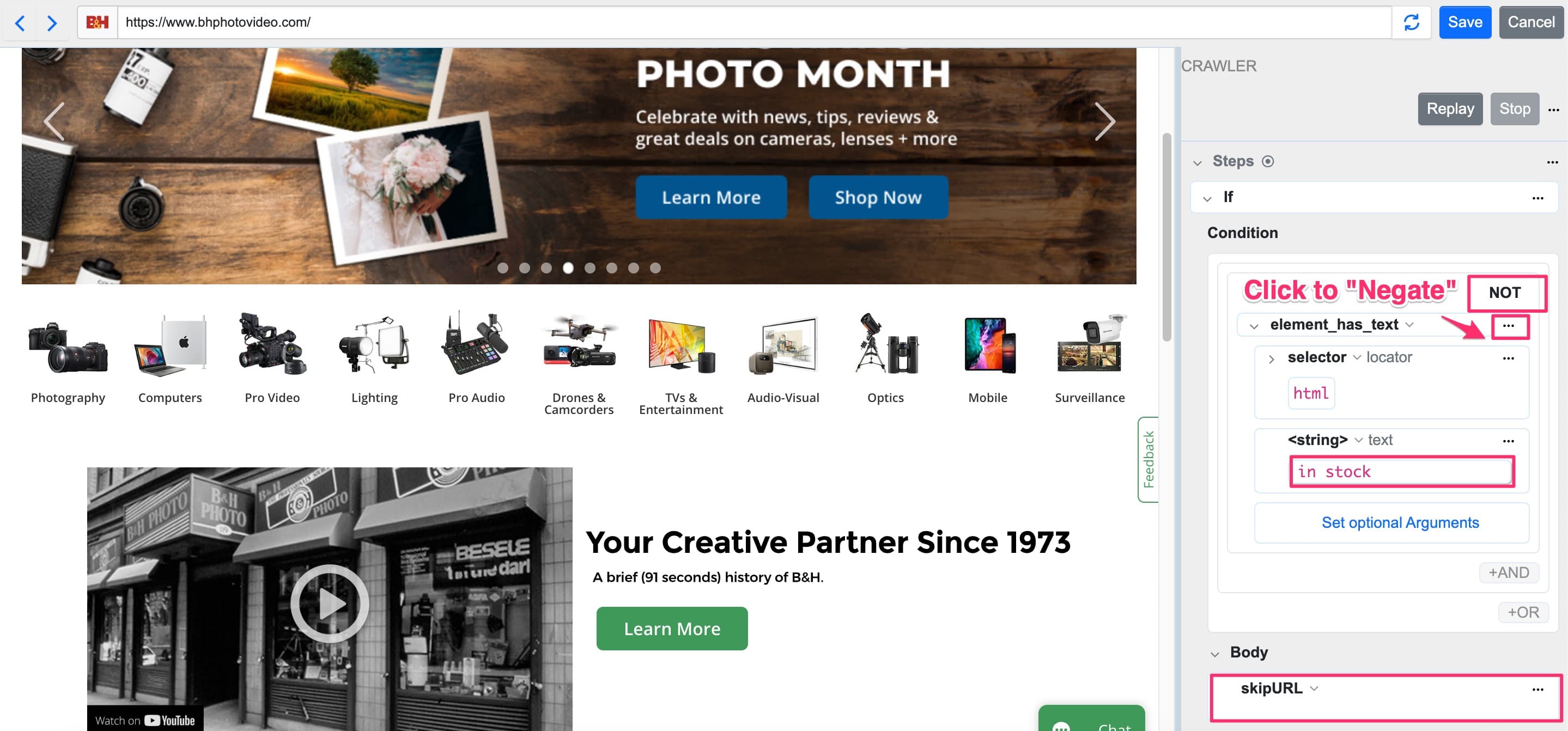

Skipping URLs Based on Content on the Page

In some cases, you may want to skip crawling URLs based on certain criteria to avoid irrelevant crawler notifications. For example, you can skip crawling URLs of out-of-stock products when monitoring a list of available products. The following steps show how to do that:

- Add an if...else block.

- Check if element_has_text contains “in stock” and negate this step using the overflow button. This will add a NOT to your block.

- In the condition body, use skipURL.

This negation in the page macro will skip all URLs that don’t have the text “in stock”.
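The negated condition is easy to get backwards, so here is the same decision written as a short Python sketch. The function name `should_skip` and the sample pages are assumptions for illustration; the macro itself is built in the step editor, not in Python.

```python
def should_skip(page_text):
    """Mirror the negated condition: skip when "in stock" is NOT on the page."""
    return "in stock" not in page_text.lower()

# Hypothetical product pages and the text found on them.
pages = {
    "https://example.com/p/1": "Blue shoes - In stock",
    "https://example.com/p/2": "Red shoes - Out of stock",
}

crawled = [url for url, text in pages.items() if not should_skip(text)]
print(crawled)
```

Only the first product URL is kept, because the second page never contains the phrase “in stock”.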

URL Rewrite

URL rewriting optimizes crawling by modifying URLs before they are crawled. This process can normalize URLs for websites with duplicate pages, manage redirects, and consolidate multiple URLs into a single preferred version.

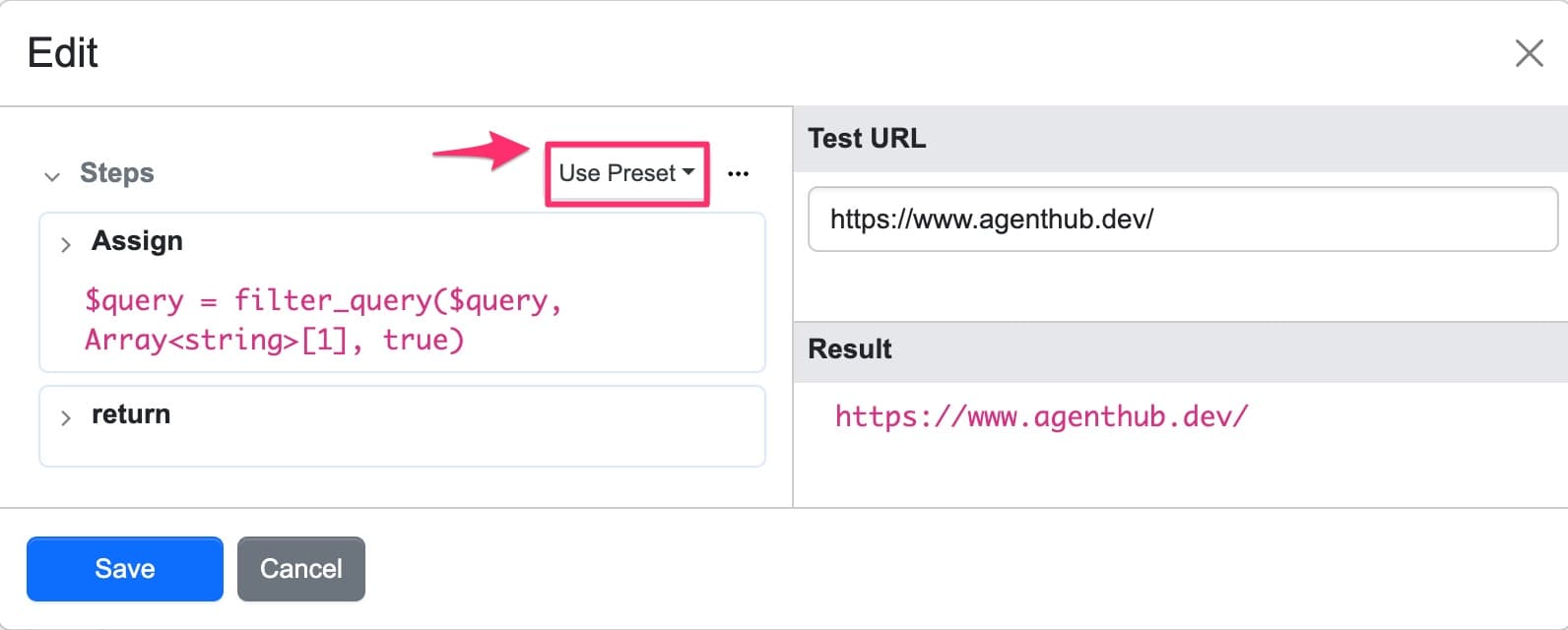

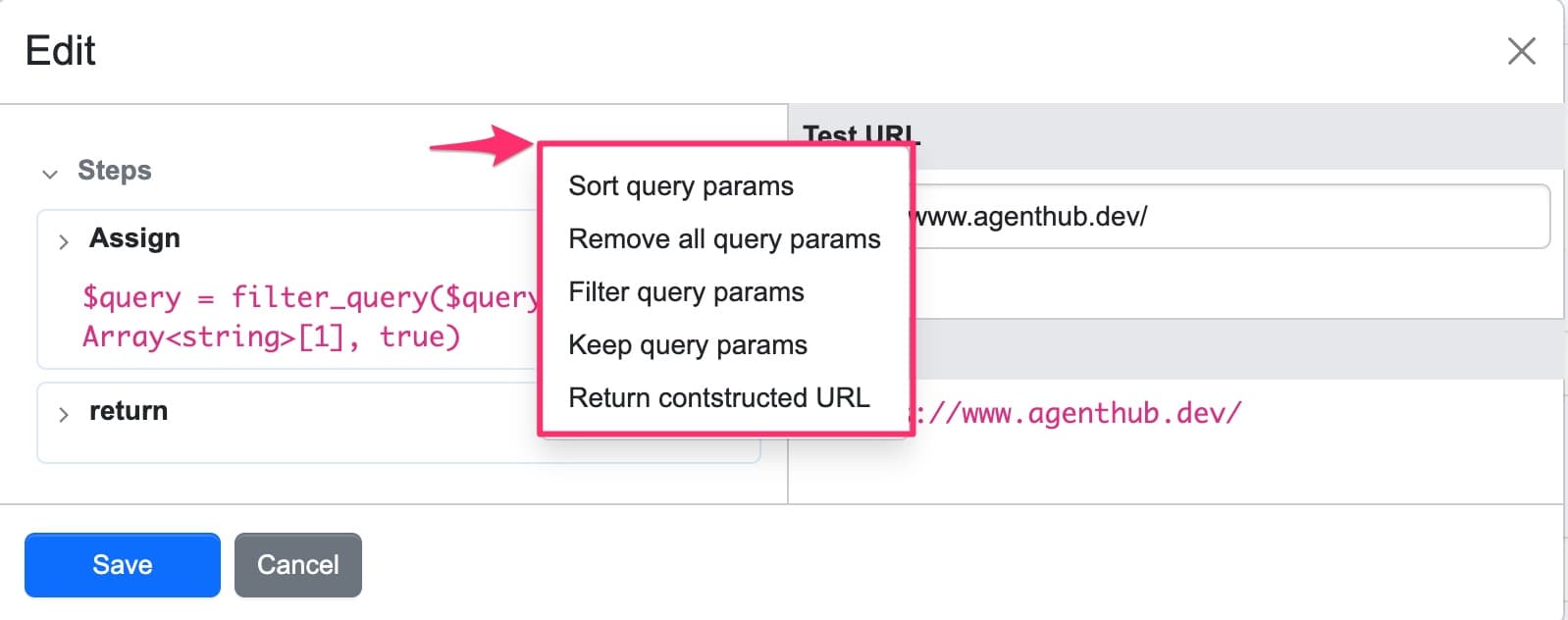

You can use the following “Presets” for common cases of URL rewriting:

Test URL: https://example.com/products?size=10&color=blue&category=shoes

- Sort query parameters: Sorts query parameters in ascending order.

  Reconstructed URL: https://example.com/products?category=shoes&color=blue&size=10

  After sorting the query parameters, you will need to use the Return constructed URL preset.

- Remove all query parameters: Removes all query parameters from URLs.

  Reconstructed URL: https://example.com/products

- Return constructed URL: Returns the URL after it has been reconstructed based on the specified function.
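To see what these presets do to a URL, the following Python sketch reproduces their effect on the test URL using the standard `urllib.parse` module. The function names are hypothetical and this is not the code the presets run; it only shows the same transformations.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def sort_query_params(url):
    """Return the URL with its query parameters sorted in ascending order."""
    parts = urlsplit(url)
    params = sorted(parse_qsl(parts.query, keep_blank_values=True))
    return urlunsplit(parts._replace(query=urlencode(params)))

def remove_query_params(url):
    """Return the URL with the whole query string removed."""
    return urlunsplit(urlsplit(url)._replace(query=""))

test_url = "https://example.com/products?size=10&color=blue&category=shoes"
sorted_url = sort_query_params(test_url)
bare_url = remove_query_params(test_url)
print(sorted_url)
print(bare_url)
```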

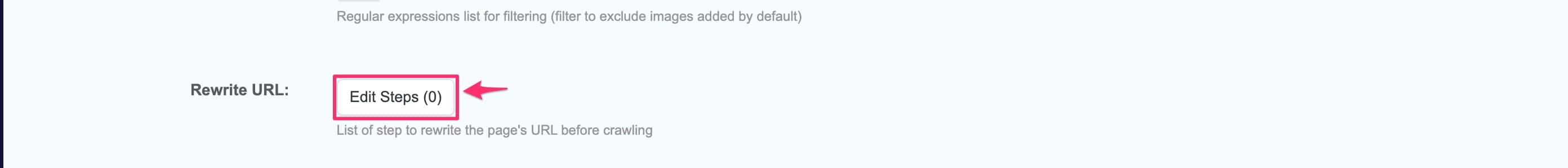

Steps to rewrite a URL:

- Open the crawler and click “Edit Crawler”.

- Next to “Rewrite URL”, click “Edit Steps”.

- Choose from the available presets.

Use Cases for URL Rewrite

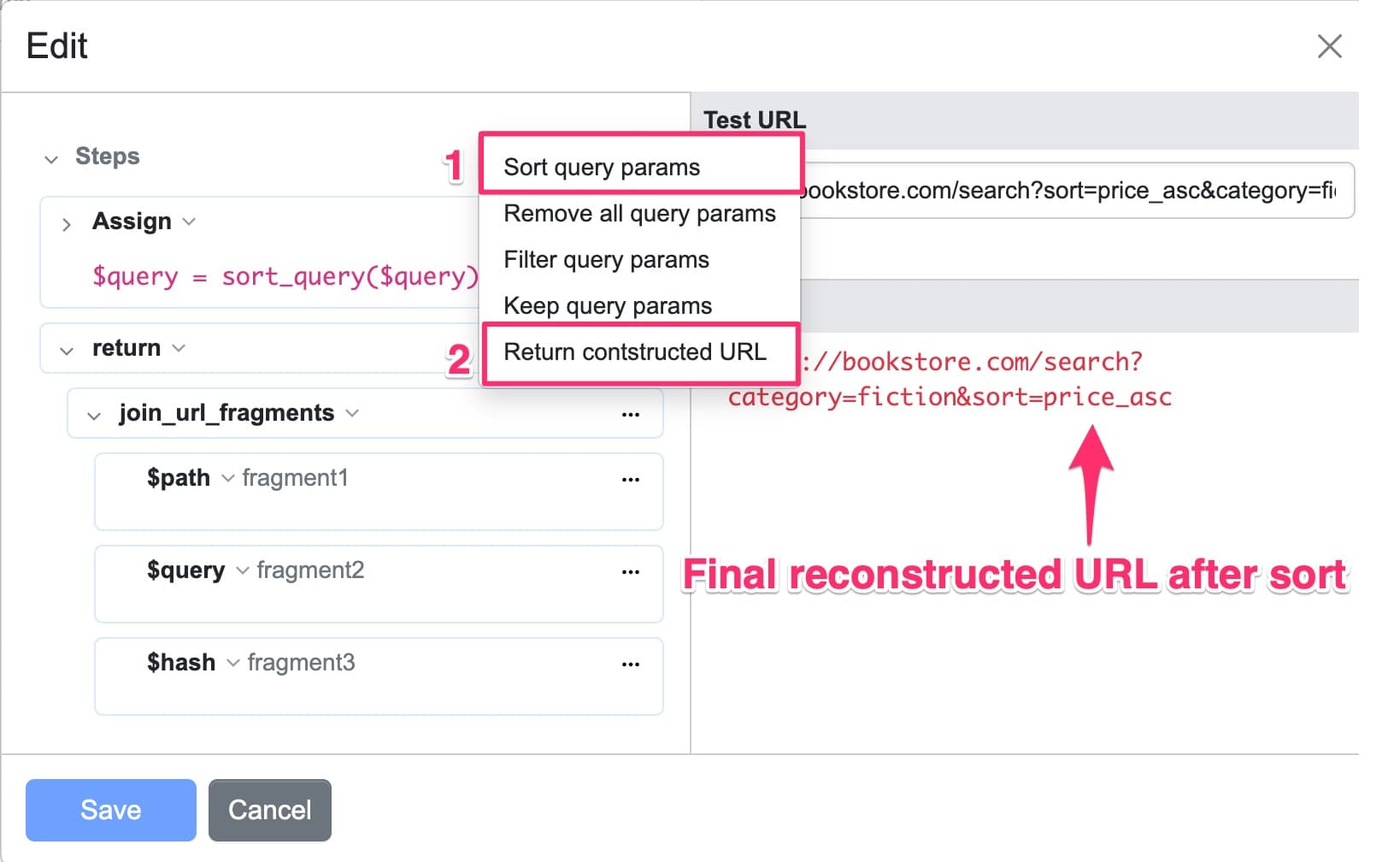

Eliminating Duplicate URLs by Sorting Query Parameters

URLs with multiple parameters in the query string can cause web crawlers to identify them as different pages, even if they lead to the same content. This happens because the order of the parameters is different. By normalizing these URLs—sorting the parameters in a preset order—and consolidating them into a single sitemap entry, we can prevent false notifications of duplicate entries.

For example, a crawler might find the following two URLs leading to the same page:

https://bookstore.com/search?sort=price_asc&category=fiction

https://bookstore.com/search?category=fiction&sort=price_asc

The sort parameter comes before the category parameter in the first URL and after it in the second, but both URLs return a search for fiction books sorted by price in ascending order. Normalizing these URLs ensures they are recognized as the same entry in the sitemap.

You can use URL rewrite to organize and optimize URLs:

- Click Edit Steps in URL rewrite.

- Select the preset Sort Query Params.

- Then, click Return Constructed URL from the presets.

- Observe that the parameters in the URL are now sorted by the crawler after the rewrite function is performed.

Ensure the final step outputs a fully formed and valid URL. For example, the return join_url_fragments function combines different parts of a URL, such as the hash, path, and query with the origin, into a single, fully formed URL. This ensures that all the individual URL components you have been working with are correctly joined together to form a valid and complete URL.
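The split, sort, and rejoin flow can be illustrated in Python with the two bookstore URLs from the example above. This is a sketch under assumptions: `normalize` is a hypothetical helper, and `urlunsplit` only plays a role comparable to the joining step described in the text.

```python
from urllib.parse import urlsplit, urlunsplit

def normalize(url):
    """Split the URL, sort its query pairs, and join the parts back together."""
    scheme, netloc, path, query, fragment = urlsplit(url)
    # Sort the raw "key=value" pairs; the values themselves are left untouched.
    query = "&".join(sorted(query.split("&"))) if query else ""
    return urlunsplit((scheme, netloc, path, query, fragment))

found = [
    "https://bookstore.com/search?sort=price_asc&category=fiction",
    "https://bookstore.com/search?category=fiction&sort=price_asc",
]

# After normalization both URLs collapse into a single entry.
unique = sorted({normalize(u) for u in found})
print(unique)
```

Because the final step returns a complete URL rather than a loose query string, the result can be used directly as the sitemap entry.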

View and Manage Crawlers

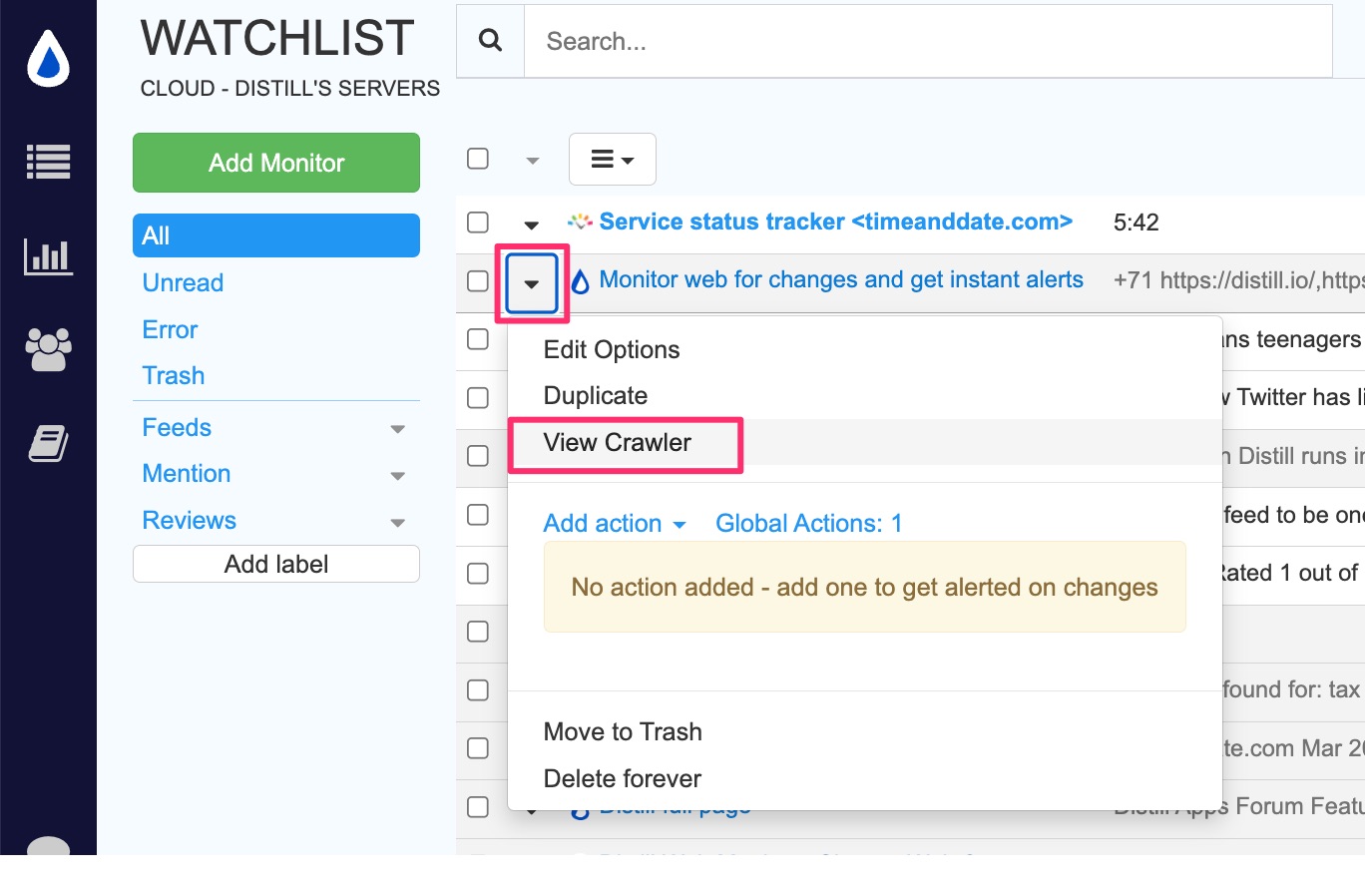

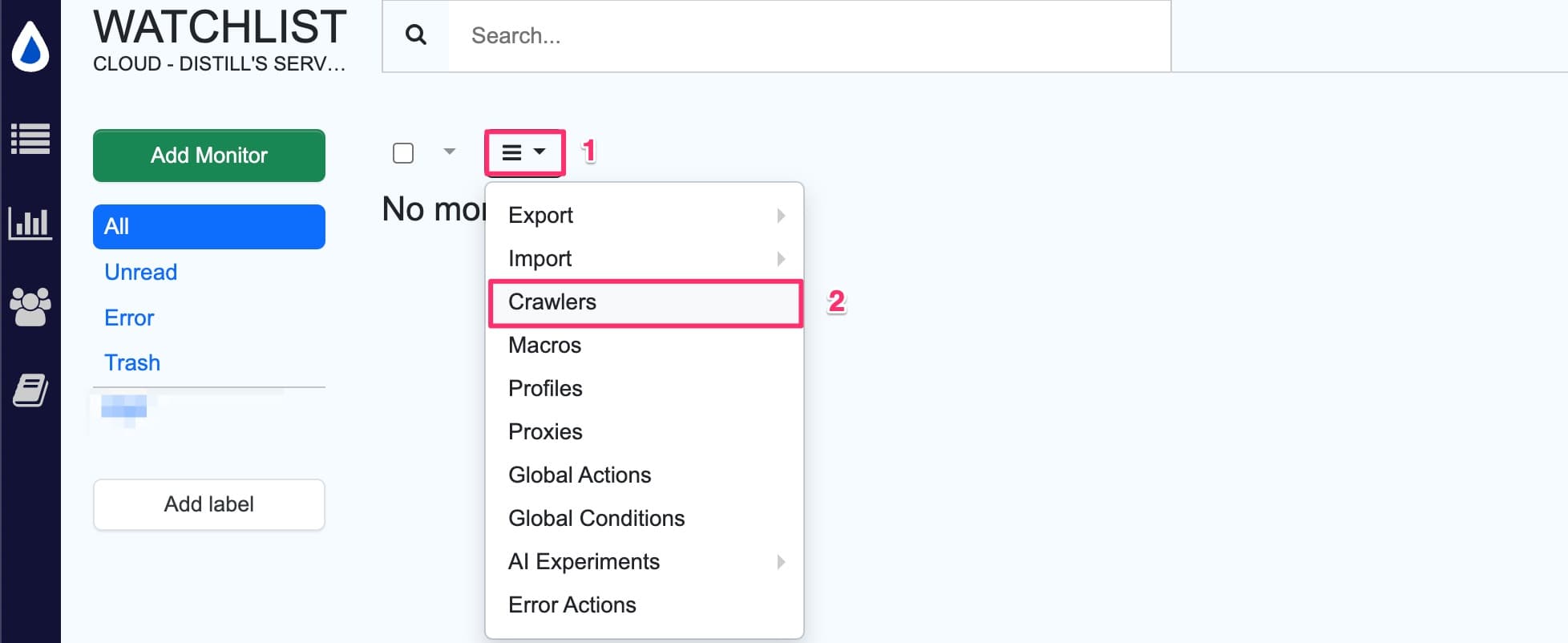

- Click the hamburger icon and select

Crawlersfrom the drop-down.

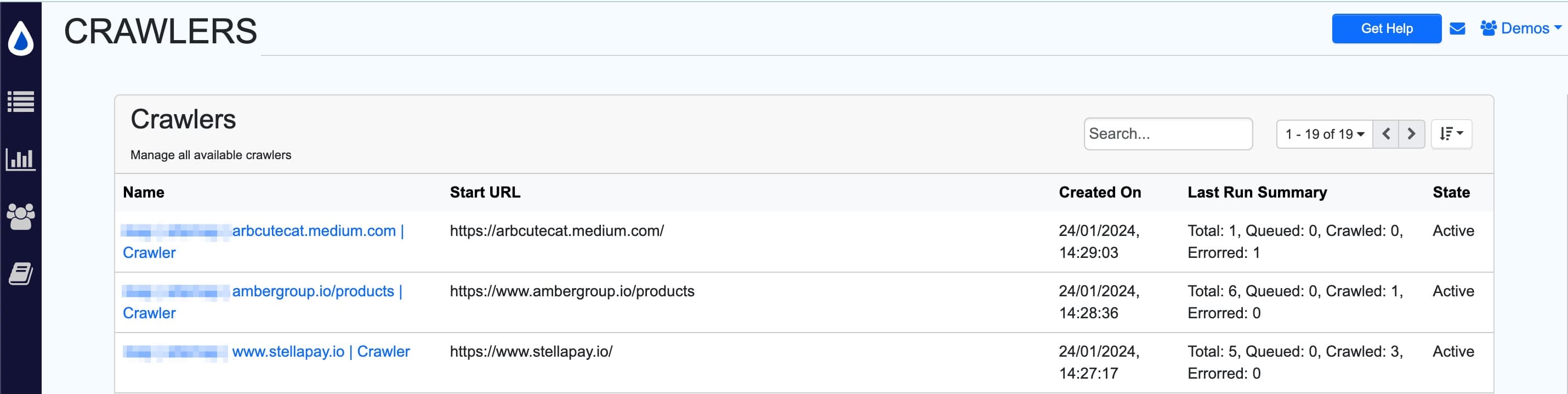

- The crawler list opens up. It has details of crawler: Name, Start URL, Creation date, Last Run Summary and State.

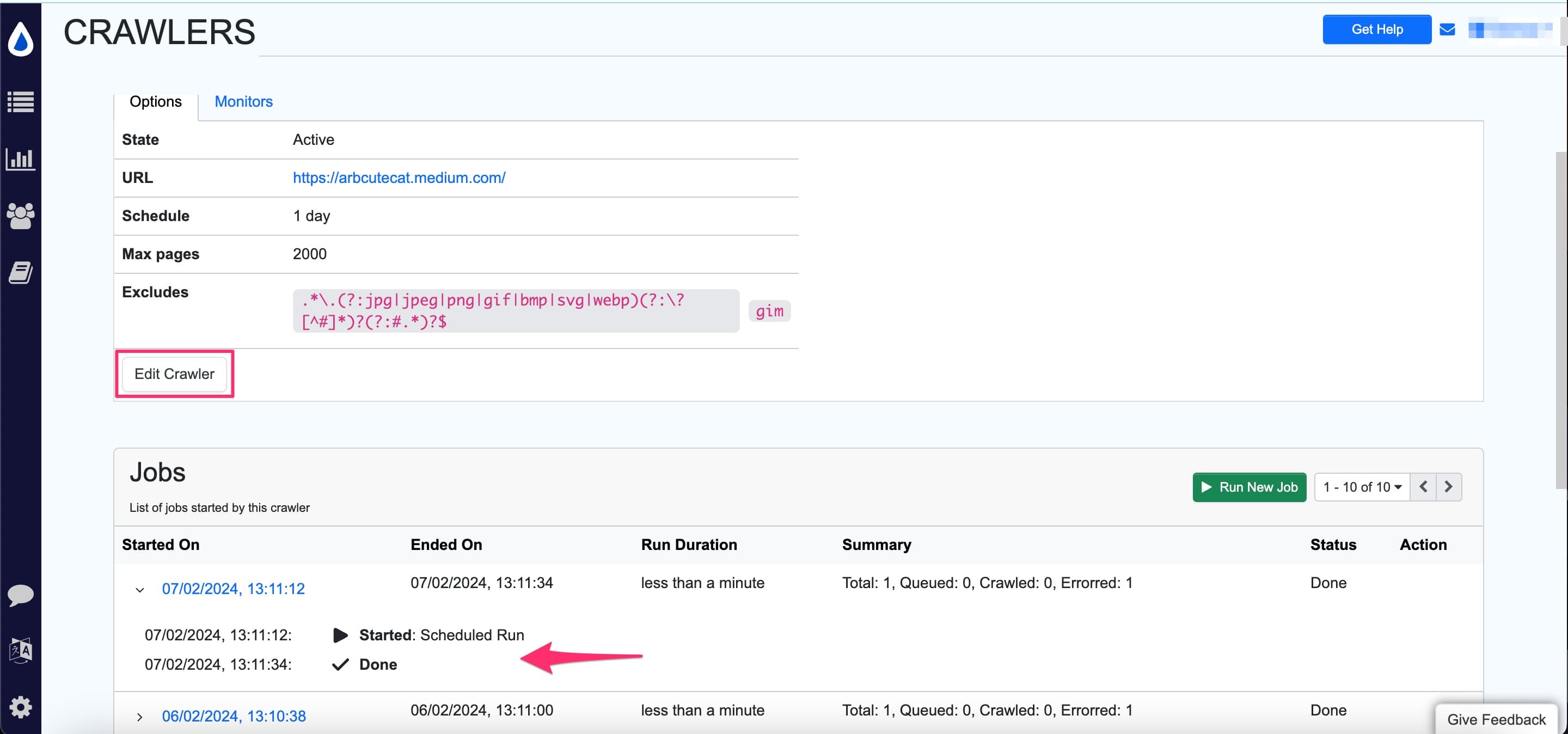

- Click on any crawler to view its details. This will show a list of jobs the crawler has run. You can also click on

Edit Crawlerto modify settings such asschedule,exclude URLs for crawling,Macro Action, andRewrite URL.

The Jobs section displays all the jobs that the crawler has executed, along with the details for each run. Each job summary includes the following information:

| Crawler Status | Description |
|---|---|
| Total | The total number of URLs found in the sitemap. |
| Queued | The number of URLs waiting to be crawled. |
| Crawled | The number of URLs that have been successfully crawled. |
| Errored | The number of URLs that encountered errors during the crawling process. |

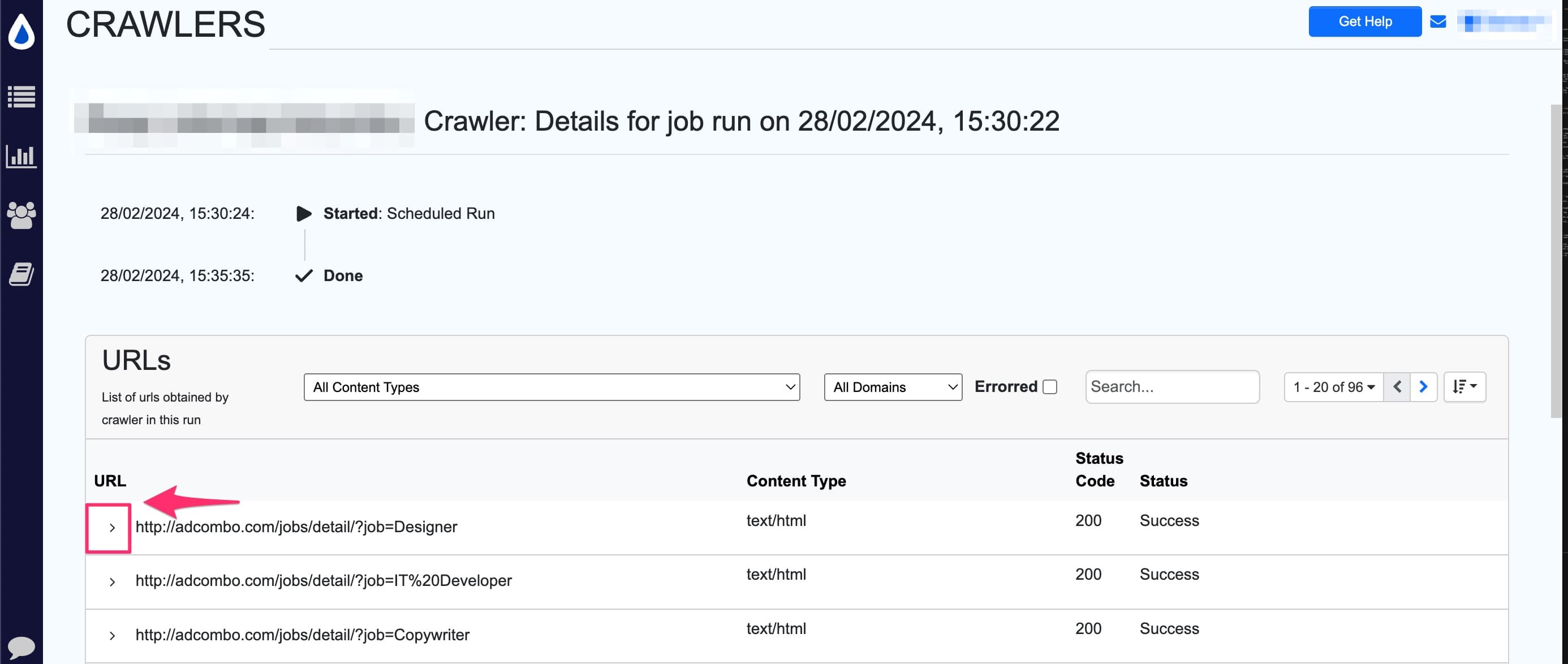

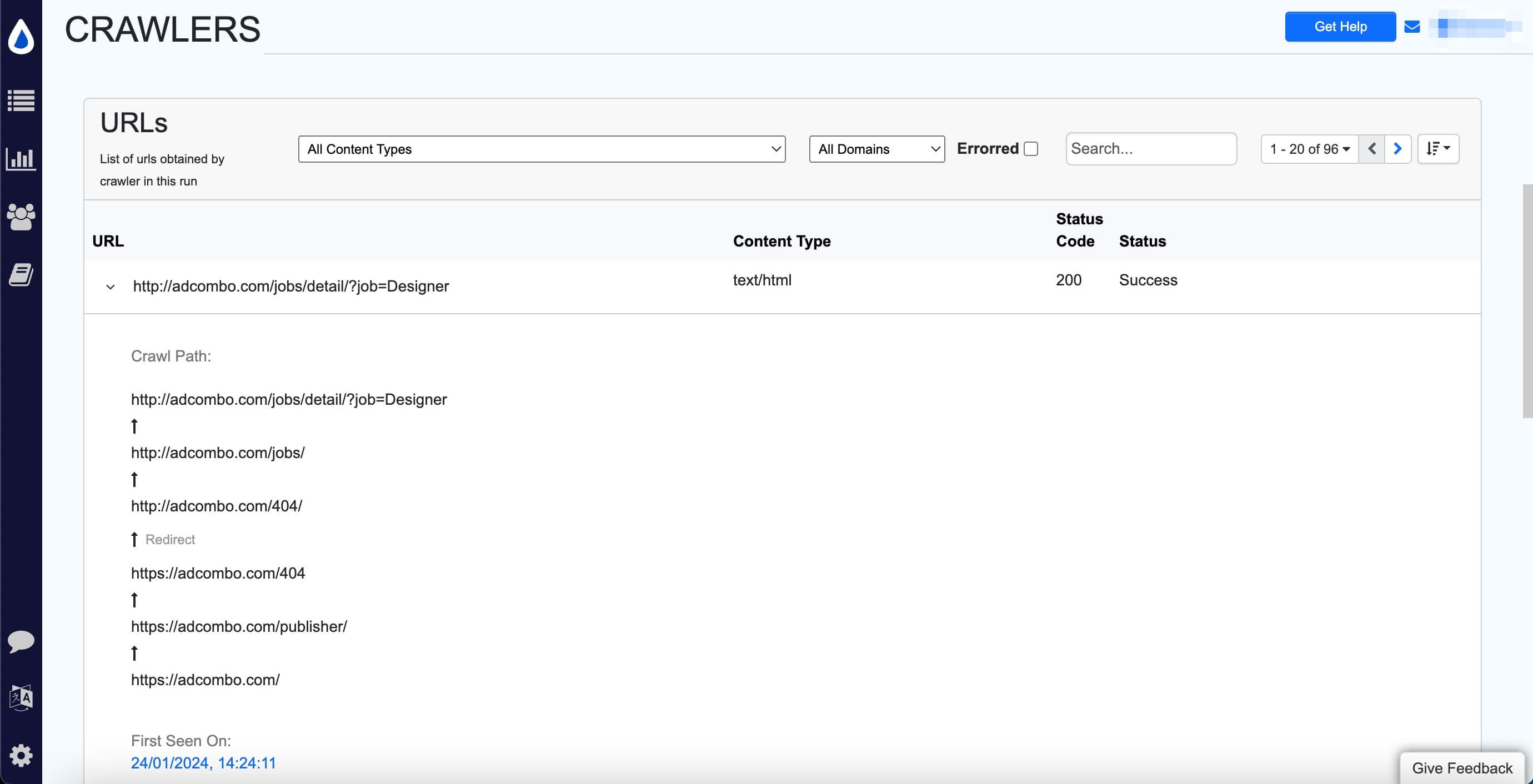

- Click on the Started on field for a job. It will show you the list of URLs found by the crawler in that run. It will also show the status of each URL, indicating whether it was crawled or not.

You can further click on the Caret > icon next to a URL to view the crawled path of the URL.

Import and Monitor Crawled URLs

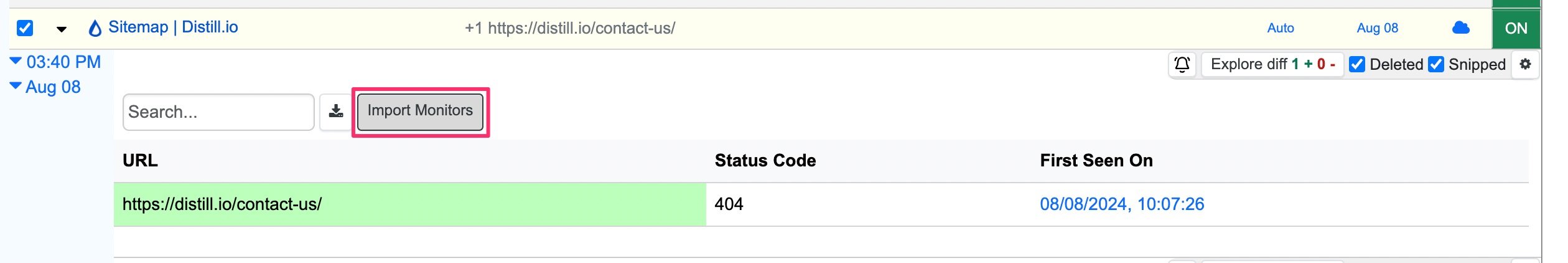

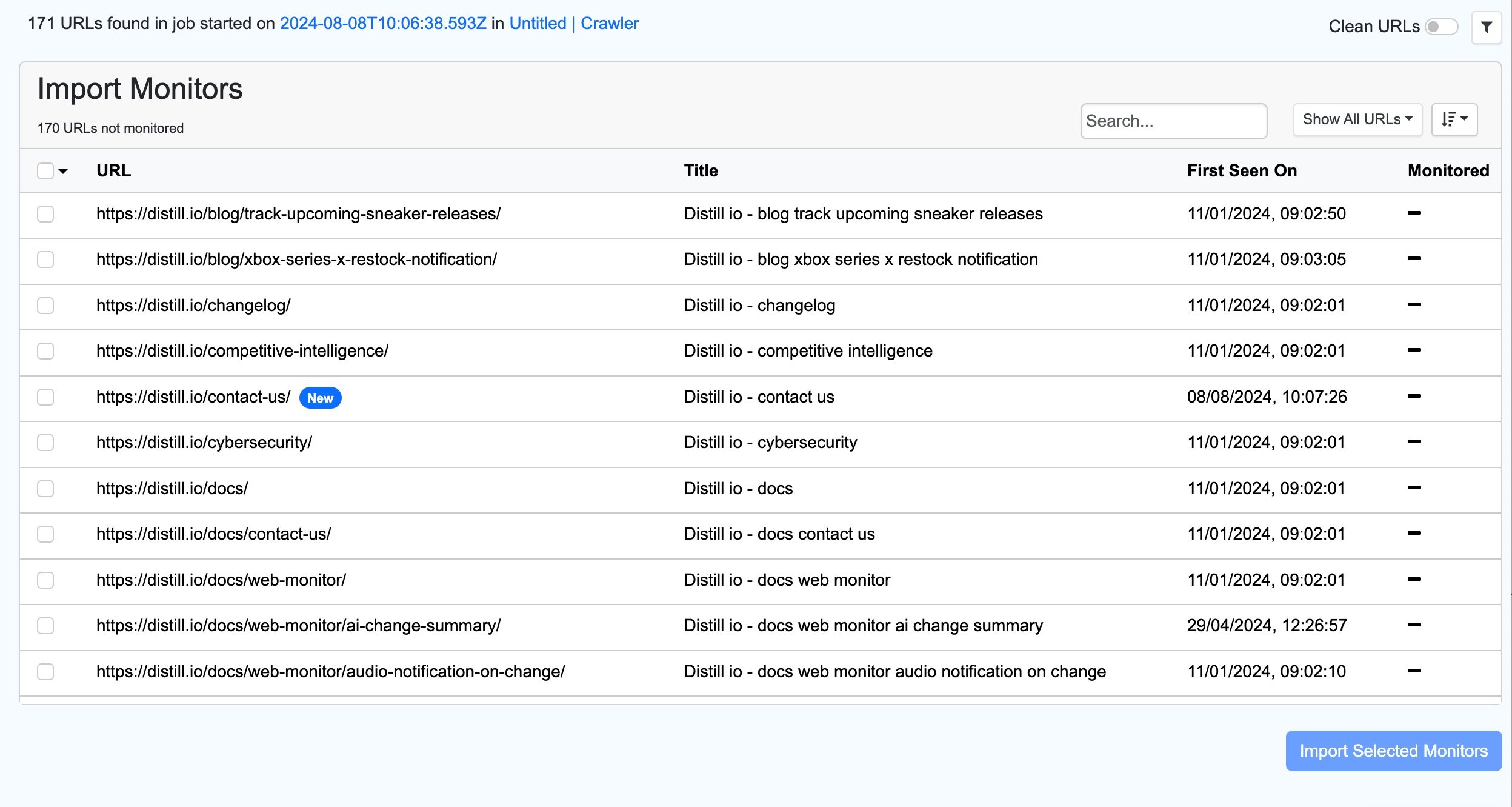

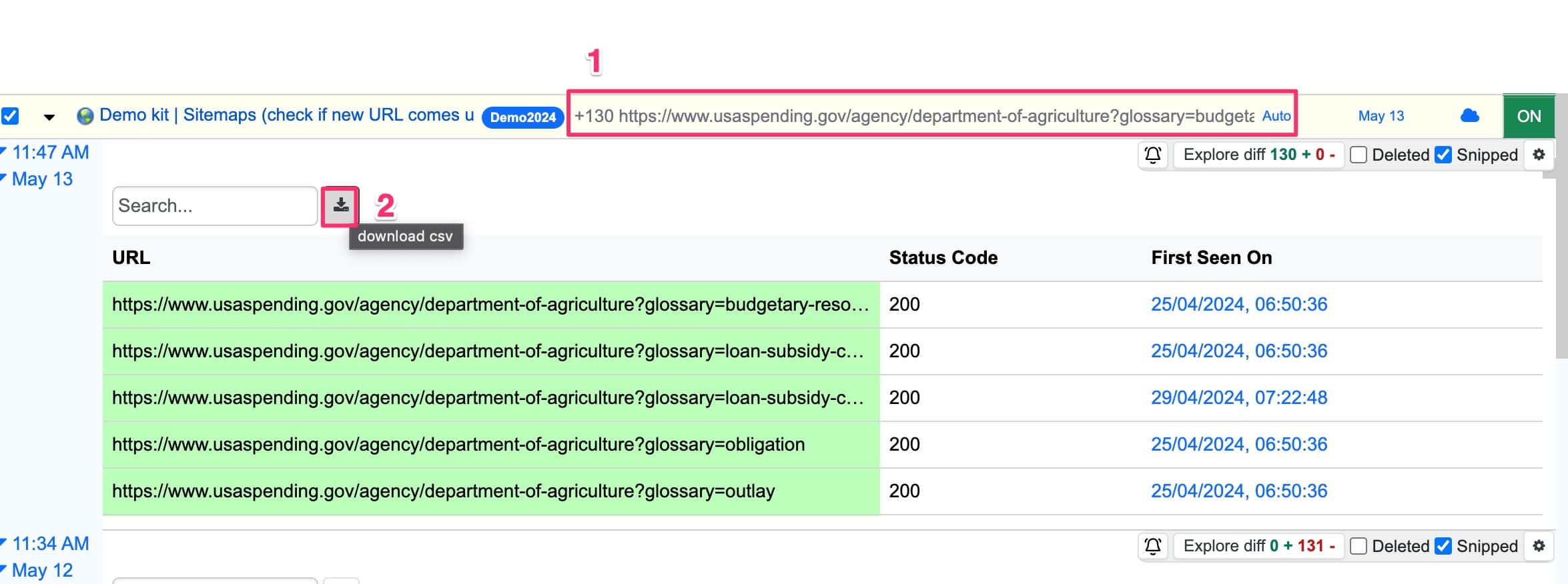

Once the sitemap monitor finishes crawling all the links on your website, you can access the Import Monitors button in the Change History section, as shown below:

Clicking this button will display a list of all the crawled links along with additional details. You can filter the list to view only the URLs that are not currently being monitored by selecting Show All URLs.

After choosing the links you want to monitor, click the Import Selected Monitors button and configure the options page to finish importing.

Export Crawled URLs

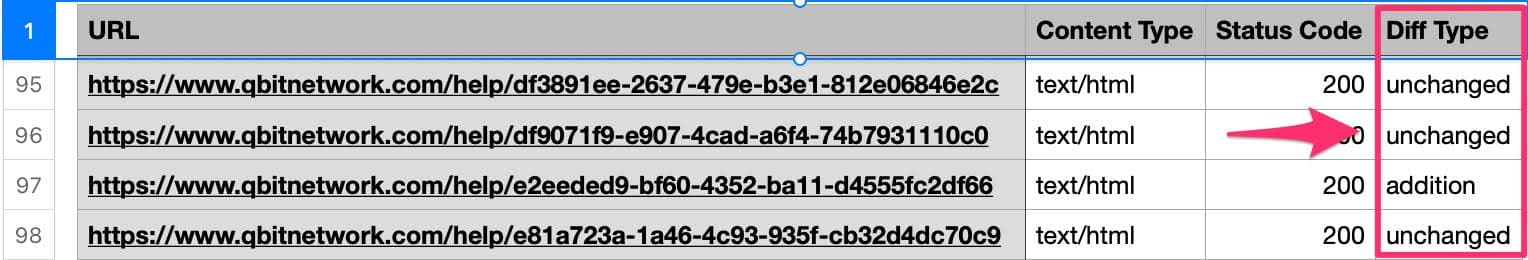

You can export crawled URLs in CSV format by following these steps:

- From your watchlist, click on the sitemap monitor’s preview.

- Click on the Download button to export the data.

This will download the list of URLs found by the crawler. The CSV file will contain the following fields: URL, Content Type, Status Code, and Diff Type. The “Diff Type” field indicates changes compared to the previous crawl:

- Addition: Newly found URL

- Unchanged: URL present in the previous crawl

- Deleted: URL that has been removed
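The three “Diff Type” values follow from comparing the URL sets of two consecutive crawls. The Python sketch below shows that comparison on made-up data; `diff_types` is a hypothetical helper and not part of the export.

```python
def diff_types(previous, current):
    """Label each URL by comparing the current crawl with the previous one."""
    prev, curr = set(previous), set(current)
    result = {}
    for url in sorted(prev | curr):
        if url in curr and url not in prev:
            result[url] = "Addition"
        elif url in curr:
            result[url] = "Unchanged"
        else:
            result[url] = "Deleted"
    return result

# Hypothetical URL lists from two consecutive crawls.
previous_crawl = ["https://example.com/a", "https://example.com/b"]
current_crawl = ["https://example.com/b", "https://example.com/c"]

labels = diff_types(previous_crawl, current_crawl)
print(labels)
```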

How Crawler Data is Cleaned Up

Crawler jobs generate a substantial volume of data with each run. To manage this data effectively, a systematic cleanup process is in place. Specifically, only the latest 10 jobs are retained, and any excess data from previous jobs is removed.

However, jobs that have detected changes in the past are preserved until the change history is truncated. This ensures that users have access to significant historical job data, even if it extends beyond the most recent 10 jobs.
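The retention rule can be summarized in a short Python sketch. It assumes that a higher job id means a more recent job and it ignores change history truncation; the `Job` class and `jobs_to_keep` function are hypothetical names for illustration only.

```python
from dataclasses import dataclass

@dataclass
class Job:
    job_id: int        # assumption: a higher id means a more recent job
    has_changes: bool  # True if this run detected a change

def jobs_to_keep(jobs, keep_latest=10):
    """Keep the latest jobs plus any older job that detected changes."""
    ordered = sorted(jobs, key=lambda j: j.job_id, reverse=True)
    latest = ordered[:keep_latest]
    older_with_changes = [j for j in ordered[keep_latest:] if j.has_changes]
    return latest + older_with_changes

# Fourteen hypothetical jobs; only job 2 detected a change.
jobs = [Job(i, has_changes=(i == 2)) for i in range(1, 15)]
kept_ids = [j.job_id for j in jobs_to_keep(jobs)]
print(kept_ids)
```

Jobs 1, 3, and 4 are the only ones dropped: they fall outside the latest 10 and did not detect any change.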
